Publications

Conference and Journal articles

2024

- Workspace Optimization Techniques to Improve Prediction of Human Motion During Human-Robot Collaboration. Yi-Shiuan Tung, Matthew B. Luebbers, Alessandro Roncone, and Bradley Hayes. Proceedings of the 2024 ACM/IEEE International Conference on Human-Robot Interaction, 2024

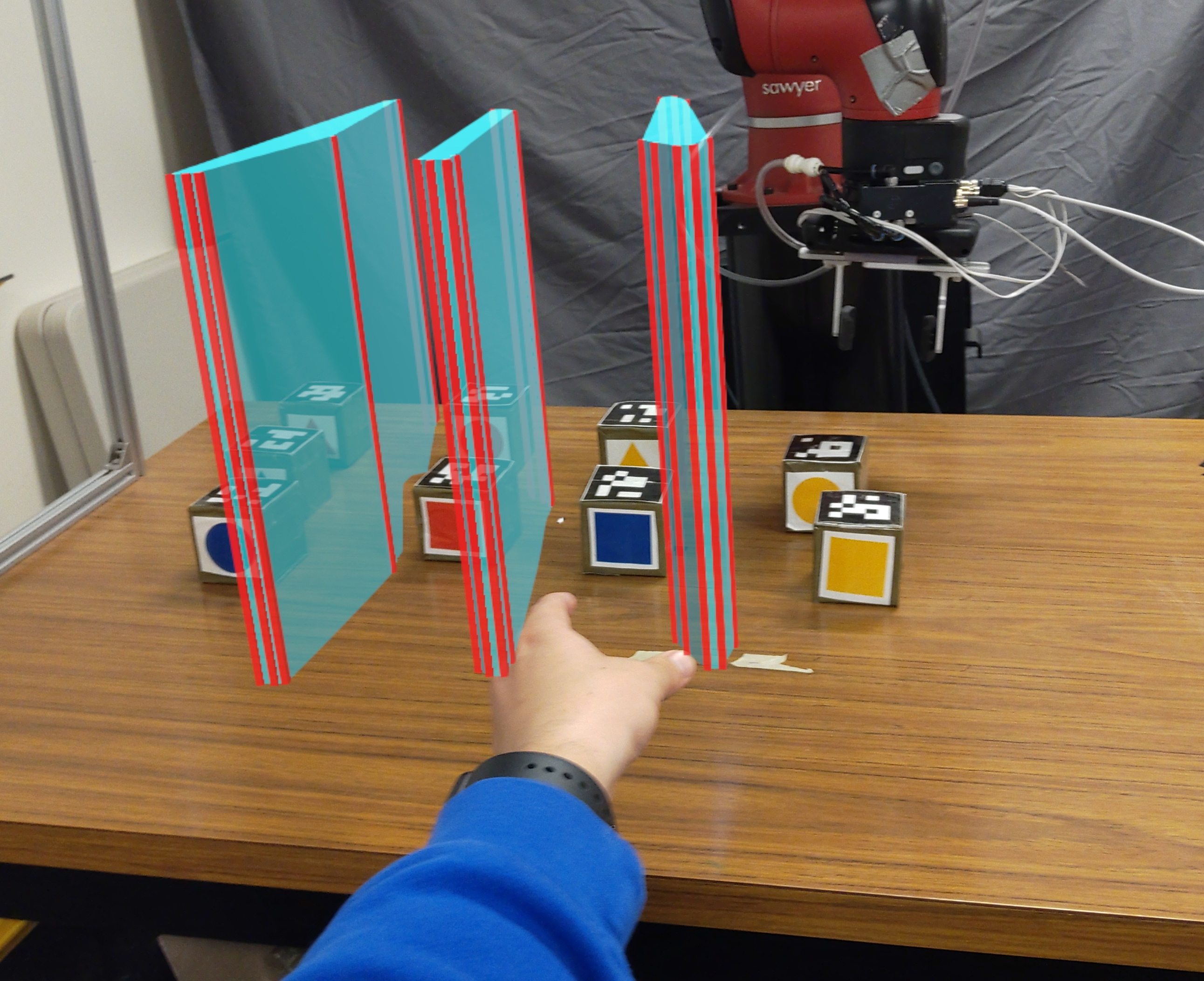

Understanding human intentions is critical for safe and effective human-robot collaboration. State-of-the-art methods for human goal prediction use learned models to account for the uncertainty of human motion data. However, that data is inherently stochastic and high-variance, which limits those models’ utility for interactions requiring coordination, including safety-critical or close-proximity tasks. Our key insight is that robot teammates can deliberately configure shared workspaces before an interaction to reduce the variance in human motion, realizing classifier-agnostic improvements in goal prediction. In this work, we present an algorithmic approach for a robot to arrange physical objects and project “virtual obstacles” using augmented reality in shared human-robot workspaces, optimizing for human legibility over a given set of tasks. We compare our approach against other workspace arrangement strategies in two human-subjects studies: one in a virtual 2D navigation domain and the other in a live tabletop manipulation domain involving a robotic manipulator arm. We evaluate the accuracy of human motion prediction models learned from each condition, demonstrating that our workspace optimization technique with virtual obstacles yields higher robot prediction accuracy using less training data.

@inproceedings{tung2024hri, title = {Workspace Optimization Techniques to Improve Prediction of Human Motion During Human-Robot Collaboration}, author = {Tung, Yi-Shiuan and Luebbers, Matthew B. and Roncone, Alessandro and Hayes, Bradley}, year = {2024}, booktitle = {Proceedings of the 2024 ACM/IEEE International Conference on Human-Robot Interaction}, }
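The abstract argues that lower-variance human motion makes goals easier to predict. As a hedged illustration only, the sketch below uses a standard cost-based Bayesian goal inference model from the legibility literature rather than the learned classifiers evaluated in the paper. It assumes Euclidean path costs, a Boltzmann-rational mover, and a uniform prior. The function names and the `beta` parameter are illustrative assumptions.

```python
import math
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float]


def path_length(path: Sequence[Point]) -> float:
    """Sum of Euclidean segment lengths along an observed (partial) path."""
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))


def goal_posterior(path: Sequence[Point], goals: Dict[str, Point],
                   beta: float = 1.0) -> Dict[str, float]:
    """Posterior over candidate goals given a partial path.

    Assumes a uniform prior and a Boltzmann-rational mover whose cost is path
    length, so for start s and current point q, P(g | path) is proportional to
        exp(-beta * (C(path) + d(q, g) - d(s, g))).
    The bracketed term is the detour the observed motion implies for goal g.
    """
    start, current = path[0], path[-1]
    traveled = path_length(path)
    scores = {
        name: -beta * (traveled + math.dist(current, g) - math.dist(start, g))
        for name, g in goals.items()
    }
    # Shift by the max score before exponentiating, for numerical stability.
    top = max(scores.values())
    weights = {name: math.exp(s - top) for name, s in scores.items()}
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


if __name__ == "__main__":
    goals = {"left": (0.0, 1.0), "right": (1.0, 1.0)}
    # A path that drifts left early makes "left" the more likely goal.
    print(goal_posterior([(0.5, 0.0), (0.4, 0.3)], goals))
```

In this toy model, a workspace arrangement that forces paths toward different goals to diverge early makes the detour terms differ sooner, so the posterior separates after fewer observations. This is only the intuition behind optimizing workspaces for legibility, not a reproduction of the paper's algorithm.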

2022

- Bilevel Optimization for Just-in-Time Robotic Kitting and Delivery via Adaptive Task Segmentation and Scheduling. Yi-Shiuan Tung, Kayleigh Bishop, Bradley Hayes, and Alessandro Roncone. 31st IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), 2022

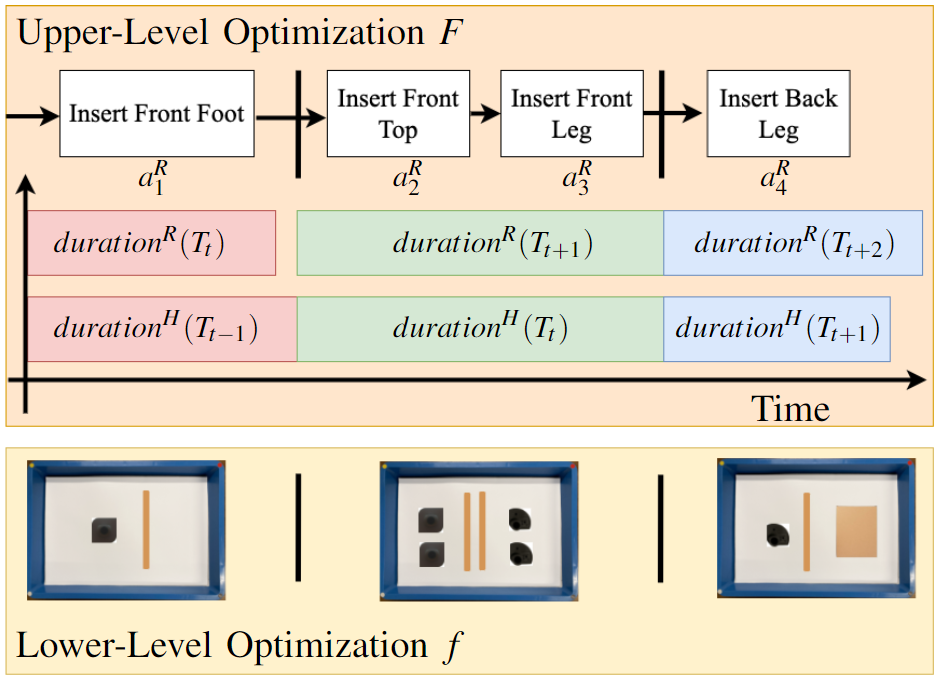

Kitting refers to the task of preparing and grouping the parts and tools needed for assembly (the "kits") in a manufacturing environment. Automating this process simplifies the assembly task for human workers and improves efficiency. Existing automated kitting systems follow scripted instructions and predefined heuristics. However, given variability in part availability and logistic delays, the inflexibility of these systems can limit the overall efficiency of an assembly line. In this paper, we propose a bilevel optimization framework that enables a robot to perform task segmentation-based part selection, kit arrangement, and delivery scheduling to provide custom-tailored kits just in time, i.e., right when they are needed. We evaluate the proposed approach through both a human-subjects study (n=18) involving the construction of a flat-pack furniture table and a shop-flow simulation based on data from the study. Our results show that the just-in-time kitting system is objectively more efficient, more resilient to upstream shop-flow delays, and subjectively preferred over two baselines. The first baseline uses kits whose boundaries follow the rigid task segmentation defined by the task graph itself. The second uses a single kit containing all parts needed to assemble one unit.

@inproceedings{tung2022kitting, title = {Bilevel Optimization for Just-in-Time Robotic Kitting and Delivery via Adaptive Task Segmentation and Scheduling}, author = {Tung, Yi-Shiuan and Bishop, Kayleigh and Hayes, Bradley and Roncone, Alessandro}, year = {2022}, booktitle = {31st IEEE International Conference on Robot and Human Interactive Communication (RO-MAN)}, url = {https://ieeexplore.ieee.org/document/9900670}, }
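The paper's bilevel formulation is not reproduced here. As a hedged sketch of only the task-segmentation idea, the code below assumes a hypothetical cost model:

- Tasks run back to back in a fixed order.
- Each kit covers a contiguous run of tasks and arrives just in time at the start of its first task.
- Every delivery costs `delivery_cost`.
- Each part accrues `holding_cost` per unit time while waiting for its task.
- A kit holds at most `capacity` parts.

Dynamic programming over split points then trades fewer deliveries against parts waiting longer. All names are illustrative.

```python
from itertools import accumulate
from typing import List, Sequence, Tuple


def segment_kits(durations: Sequence[float], parts: Sequence[int],
                 delivery_cost: float, capacity: int,
                 holding_cost: float = 1.0) -> Tuple[float, List[Tuple[int, int]]]:
    """Split an ordered task list into contiguous kits [i, j) of minimum cost.

    Hypothetical cost: delivery_cost per kit, plus holding_cost per part per
    unit of time it waits between kit arrival and the start of its task.
    Part counts are assumed to be non-negative.
    """
    n = len(durations)
    if n == 0:
        return 0.0, []
    # start[k] is when task k begins, assuming tasks run back to back.
    start = [0.0] + list(accumulate(durations))[:-1]

    best = [0.0] + [float("inf")] * n  # best[j]: min cost to cover tasks [0, j)
    prev = [-1] * (n + 1)              # prev[j]: first task of the last kit
    for j in range(1, n + 1):
        for i in range(j - 1, -1, -1):
            if sum(parts[i:j]) > capacity:
                break  # extending the kit further back only adds parts
            hold = sum(parts[k] * (start[k] - start[i]) for k in range(i, j))
            cost = best[i] + delivery_cost + holding_cost * hold
            if cost < best[j]:
                best[j], prev[j] = cost, i

    if best[n] == float("inf"):
        raise ValueError("some task needs more parts than one kit can hold")
    kits, j = [], n
    while j > 0:
        kits.append((prev[j], j))
        j = prev[j]
    return best[n], kits[::-1]
```

For example, `segment_kits([1, 1, 1], [2, 2, 2], delivery_cost=100, capacity=10)` bundles all three tasks into a single kit `(0, 3)`, because one delivery plus a little waiting is cheaper than extra deliveries. With `delivery_cost=0`, the same call ships one kit per task.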

- PokeRRT: Poking as a Skill and Failure Recovery Tactic for Planar Non-Prehensile Manipulation. Anuj Pasricha, Yi-Shiuan Tung, Bradley Hayes, and Alessandro Roncone. IEEE Robotics and Automation Letters, 2022

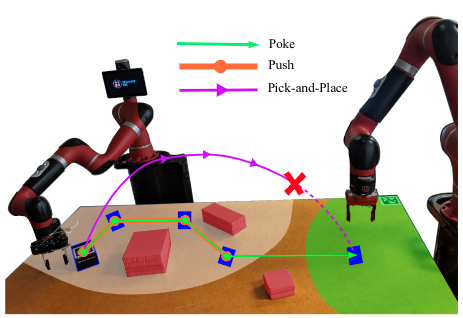

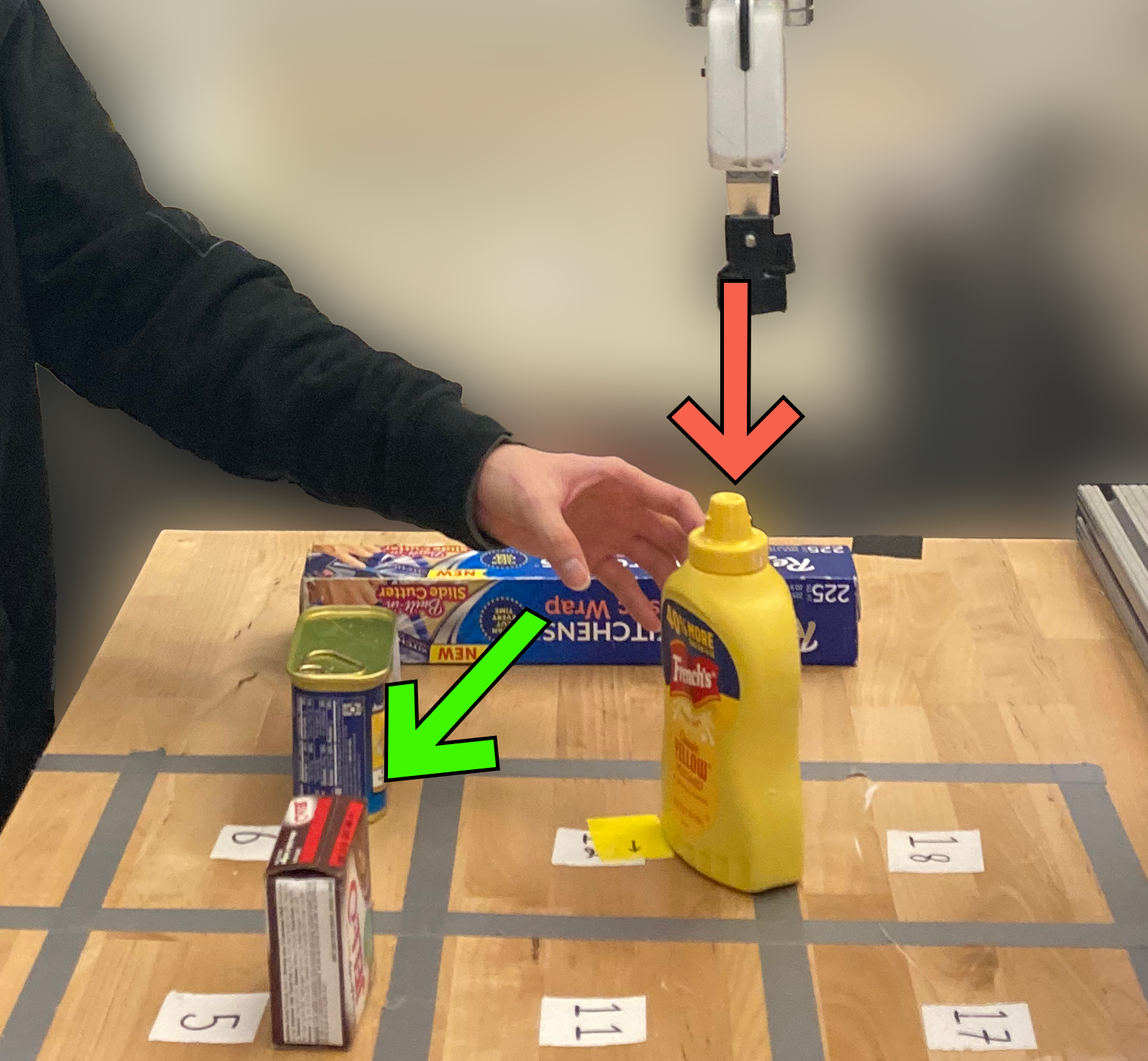

In this work, we introduce PokeRRT, a novel motion planning algorithm that demonstrates poking as an effective non-prehensile manipulation skill, enabling fast manipulation of objects and increasing the size of a robot’s reachable workspace. We showcase poking as a failure recovery tactic used synergistically with pick-and-place, providing resiliency when pick-and-place initially fails or is unachievable. Our experiments demonstrate the efficiency of the proposed framework in planning object trajectories using poking manipulation in both uncluttered and cluttered environments. We demonstrate quantitatively and qualitatively that PokeRRT adapts to different scenarios in simulation and real-world settings. Our results also show the advantages of poking over pushing and grasping in terms of success rate and task time.

@article{pasricha2021pokerrt, title = {PokeRRT: Poking as a Skill and Failure Recovery Tactic for Planar Non-Prehensile Manipulation}, author = {Pasricha, Anuj and Tung, Yi-Shiuan and Hayes, Bradley and Roncone, Alessandro}, year = {2022}, journal = {IEEE Robotics and Automation Letters}, }
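PokeRRT builds on rapidly-exploring random trees (RRT). The sketch below is a plain RRT for a point in the unit square with circular obstacles. It is included only to show the sample, nearest, steer, and collision-check loop that RRT variants share. It does not model poking, pushing, or object dynamics, and its parameters (step size, goal bias, goal tolerance) are illustrative assumptions.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]
Circle = Tuple[float, float, float]  # (center x, center y, radius)


def segment_free(a: Point, b: Point, obstacles: Sequence[Circle],
                 resolution: float = 0.01) -> bool:
    """Check segment a-b against circular obstacles by dense sampling."""
    steps = max(1, math.ceil(math.dist(a, b) / resolution))
    for s in range(steps + 1):
        t = s / steps
        x, y = a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1])
        if any(math.hypot(x - cx, y - cy) <= r for cx, cy, r in obstacles):
            return False
    return True


def rrt(start: Point, goal: Point, obstacles: Sequence[Circle],
        step: float = 0.05, goal_tol: float = 0.05, goal_bias: float = 0.1,
        max_iters: int = 5000, seed: int = 0) -> Optional[List[Point]]:
    """Basic RRT in the unit square; returns a start-to-goal path or None."""
    rng = random.Random(seed)
    nodes: List[Point] = [start]
    parents: List[int] = [-1]
    for _ in range(max_iters):
        # Sample: occasionally the goal itself, otherwise a uniform point.
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = (rng.random(), rng.random())
        # Nearest node by linear scan (a k-d tree would scale better).
        near_i = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[near_i]
        d = math.dist(near, sample)
        if d == 0.0:
            continue
        # Steer: move at most `step` from the nearest node toward the sample.
        scale = min(1.0, step / d)
        new = (near[0] + scale * (sample[0] - near[0]),
               near[1] + scale * (sample[1] - near[1]))
        if not segment_free(near, new, obstacles):
            continue
        nodes.append(new)
        parents.append(near_i)
        if math.dist(new, goal) <= goal_tol and segment_free(new, goal, obstacles):
            # Follow parent pointers back to the root, then reverse.
            path = [] if new == goal else [goal]
            i = len(nodes) - 1
            while i != -1:
                path.append(nodes[i])
                i = parents[i]
            return path[::-1]
    return None
```

A call such as `rrt((0.1, 0.1), (0.9, 0.9), [(0.5, 0.5, 0.15)])` grows a tree around the central obstacle. PokeRRT's contribution, per the abstract, lies in what a tree edge represents (poking actions on an object) rather than in this generic loop.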

Workshop papers

2025

- CRED: Counterfactual Reasoning and Environment Design for Active Preference Learning. Yi-Shiuan Tung, Bradley Hayes, and Alessandro Roncone. In RSS’25 Human-in-the-Loop Robot Learning: Teaching, Correcting, and Adapting, Jun 2025

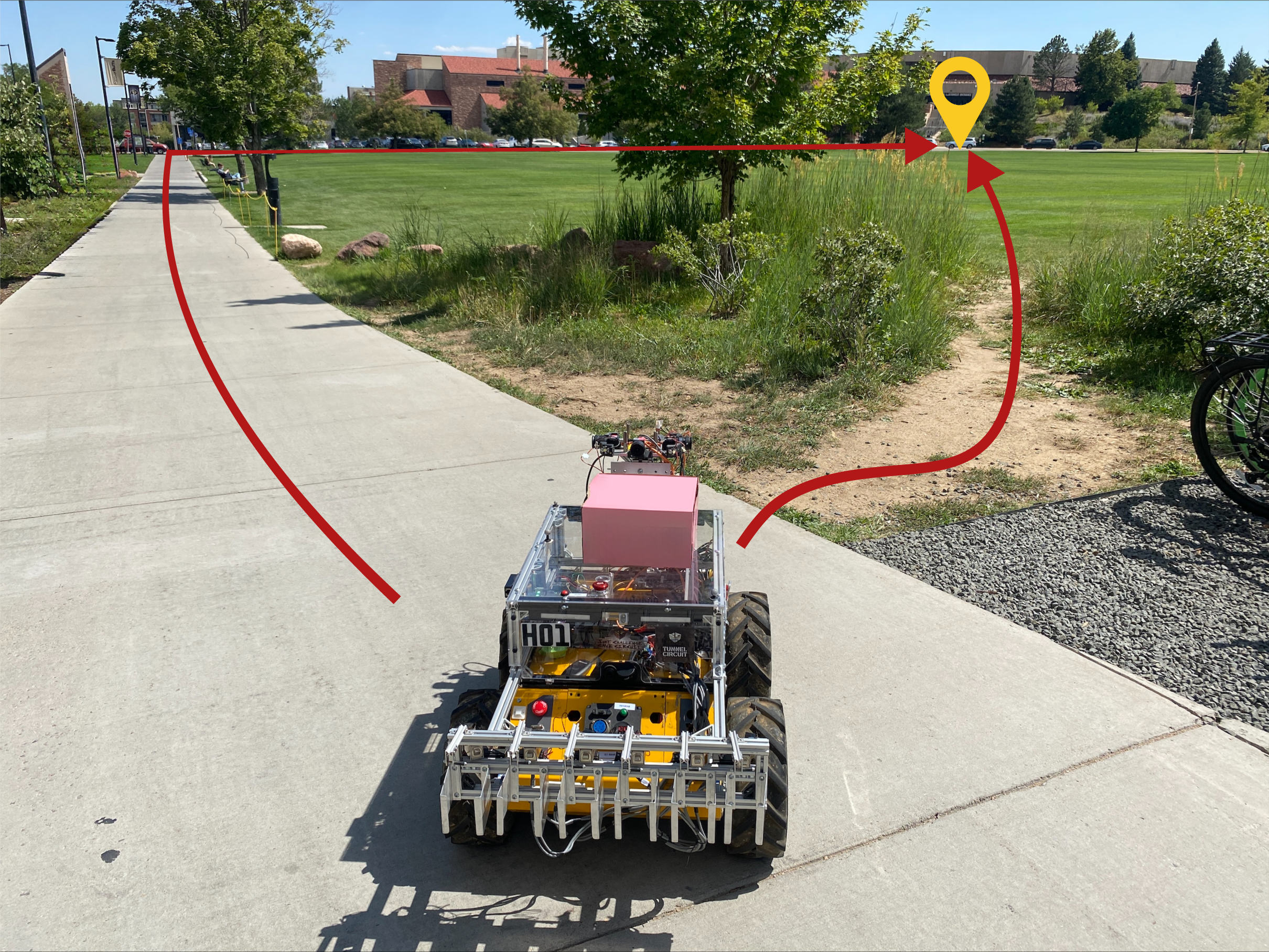

For effective real-world deployment, robots should adapt to human preferences, such as the balance among distance, time, and safety in delivery routing. Active preference learning (APL) learns human reward functions by presenting trajectories for ranking. However, existing methods often struggle to explore the full trajectory space and fail to identify informative queries, particularly in long-horizon tasks. We propose CRED, a trajectory generation method for APL that improves reward estimation by jointly optimizing environment design and trajectory selection. CRED “imagines” new scenarios through environment design. It then uses counterfactual reasoning (sampling rewards from its current belief and asking “What if this reward were the true preference?”) to generate a diverse and informative set of trajectories for ranking. Experiments in GridWorld and in real-world navigation using OpenStreetMap data show that CRED improves reward learning and generalizes effectively across different environments.
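As a hedged sketch of the counterfactual step only, the code below omits environment design and rests on several assumptions. The reward is linear, r(τ) = w · φ(τ), over trajectory features φ. The belief over w is represented by weighted particles. A fixed set of candidate trajectories stands in for one imagined environment.

Each sampled w answers "what if this were the true preference?" by selecting the trajectory that is optimal under it. The belief update uses a Bradley-Terry (logistic) preference model. This is a common choice in preference learning, assumed here rather than taken from the paper. All names are hypothetical.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple

Weights = Tuple[float, ...]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def counterfactual_queries(particles: Sequence[Weights], probs: Sequence[float],
                           features: Dict[str, Weights], k: int,
                           seed: int = 0) -> List[str]:
    """Draw k reward hypotheses from the belief and, for each, keep the
    candidate trajectory that would be optimal if it were the true reward."""
    rng = random.Random(seed)
    chosen: List[str] = []
    for w in rng.choices(particles, weights=probs, k=k):
        best = max(features, key=lambda name: dot(w, features[name]))
        if best not in chosen:  # deduplicate to keep the query set diverse
            chosen.append(best)
    return chosen


def update_belief(particles: Sequence[Weights], probs: Sequence[float],
                  features: Dict[str, Weights], preferred: str,
                  other: str) -> List[float]:
    """Reweight particles after the user ranks `preferred` above `other`,
    using P(preferred > other | w) = sigmoid(w . (phi_preferred - phi_other))."""
    diff = [a - b for a, b in zip(features[preferred], features[other])]
    unnormalized = [p / (1.0 + math.exp(-dot(w, diff)))
                    for w, p in zip(particles, probs)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]
```

With features such as `{"fast": (1.0, 0.0), "safe": (0.0, 1.0)}`, a belief split between favoring speed and favoring safety yields a query containing both trajectories. A ranking of `"safe"` over `"fast"` then shifts probability toward the safety-weighted particle.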

2024

- Stereoscopic Virtual Reality Teleoperation for Human Robot Collaborative Dataset Collection. Yi-Shiuan Tung, Matthew B. Luebbers, Alessandro Roncone, and Bradley Hayes. In 7th International Workshop on Virtual, Augmented, and Mixed-Reality for Human-Robot Interactions (VAM-HRI), Mar 2024

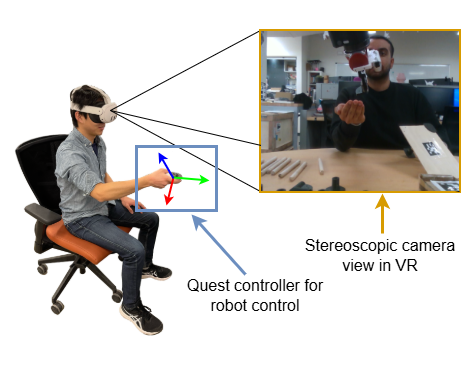

Large and diverse datasets are required to train general-purpose models in NLP, computer vision, and robot manipulation. However, existing robotics datasets feature single robots interacting with a static environment, whereas in many real-world scenarios robots must interact with humans or other dynamic agents. In this work, we present a virtual reality (VR) teleoperation system that enables data collection for human-robot collaborative (HRC) tasks. The operator using the VR system receives an immersive, high-fidelity egocentric view with a stereoscopic depth effect. This view provides the situational awareness required to teleoperate the robot remotely across various tasks. We propose to collect data on a set of HRC tasks and introduce a taxonomy to categorize them. We envision that our VR system will broaden the scope of tasks robots can perform with human collaborators and that the proposed dataset will enable the development of new algorithms for HRC.

- Causal Influence Detection for Human Robot Interaction. Yi-Shiuan Tung, Himanshu Gupta, Wei Jiang, Bradley Hayes, and 1 more author. In HRI ’24: Workshop on Causal Learning for Human-Robot Interaction (Causal-HRI), Mar 2024

In human-robot and multi-agent interaction, the ego agent models how its actions influence the actions of other agents. This lets it better anticipate what those agents will do next, facilitating effective collaboration and enhanced safety. Prior work assumes that the ego agent has influence in all states, when in reality influence is present only in a subset of scenarios. In this work, we propose to detect causal influence by measuring the mutual information between the ego agent’s actions and the other agents’ actions. We evaluate our approach in a simulated pedestrian navigation task and a collaborative cooking game. Our results show that causal influence detection is a promising approach, though it may yield low accuracy when data are insufficient.
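The following is a minimal sketch of the quantity the abstract describes, assuming discrete actions and paired samples. It computes the plug-in (empirical-count) estimate of the mutual information between the ego agent's actions and another agent's actions. The estimator choice and the thresholding helper are illustrative assumptions, not the authors' implementation.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def mutual_information(ego: Sequence[Hashable], other: Sequence[Hashable]) -> float:
    """Plug-in estimate of I(A_ego; A_other) in bits from paired samples."""
    if len(ego) != len(other) or len(ego) == 0:
        raise ValueError("need two non-empty action sequences of equal length")
    n = len(ego)
    joint = Counter(zip(ego, other))
    count_ego, count_other = Counter(ego), Counter(other)
    mi = 0.0
    for (a, b), c in joint.items():
        # p(a,b) * log2(p(a,b) / (p(a) p(b))), written in terms of counts.
        mi += (c / n) * math.log2(c * n / (count_ego[a] * count_other[b]))
    return mi


def influence_detected(ego: Sequence[Hashable], other: Sequence[Hashable],
                       threshold_bits: float = 0.1) -> bool:
    """Hypothetical decision rule: flag influence when MI exceeds a threshold."""
    return mutual_information(ego, other) > threshold_bits
```

The plug-in estimate is biased upward on small samples, so a fixed threshold can flag spurious influence when data are scarce. This is one plausible reason such detection loses accuracy with insufficient data.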

2023

- Improving Human Legibility in Collaborative Robot Tasks through Augmented Reality and Workspace Preparation. Yi-Shiuan Tung, Matthew B. Luebbers, Alessandro Roncone, and Bradley Hayes. Mar 2023

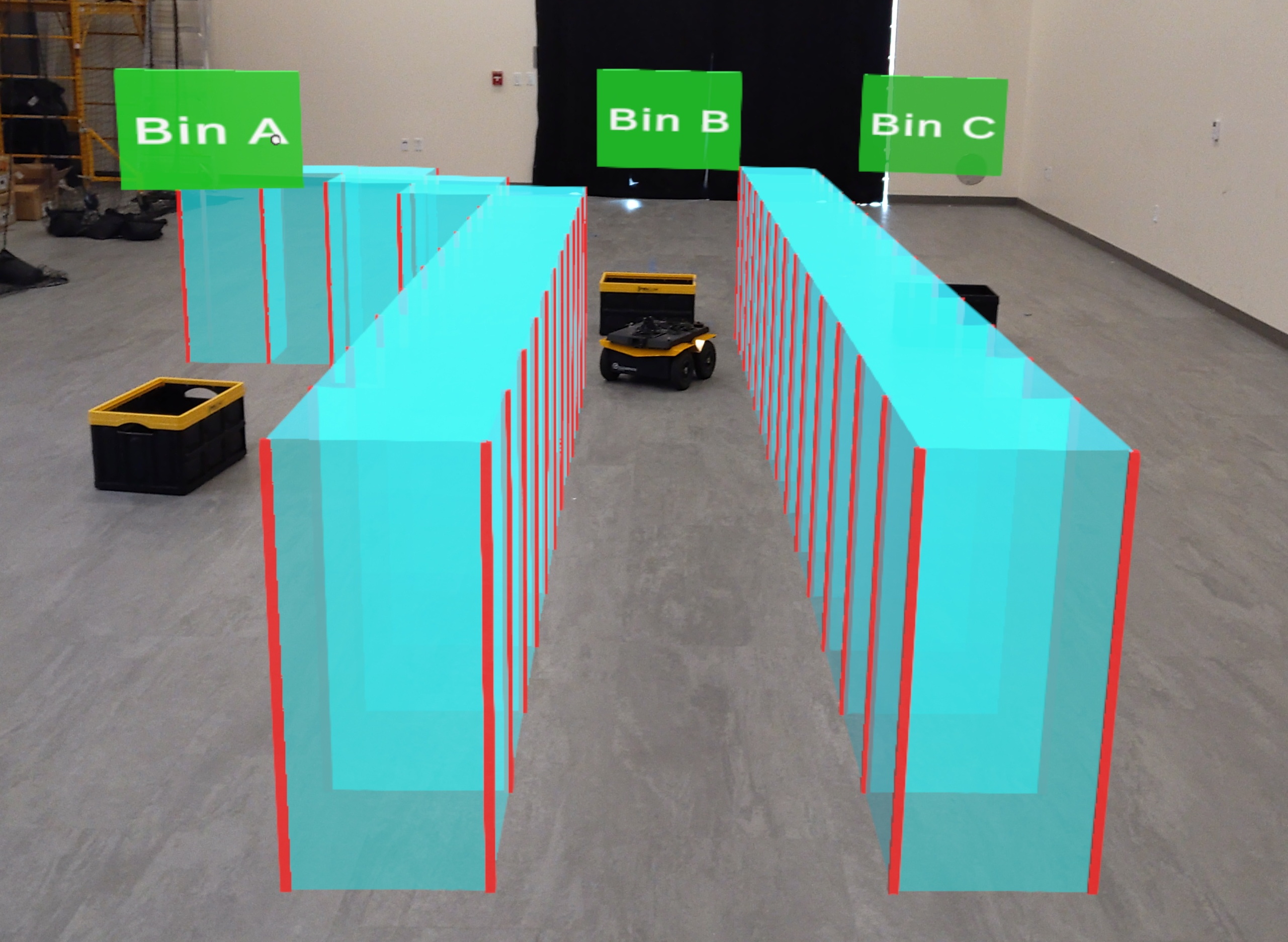

Understanding the intentions of human teammates is critical for safe and effective human-robot interaction. The canonical approach to human-aware robot motion planning is to first predict the human’s goal or path and then construct a robot plan that avoids collision with the human. This approach can produce unsafe interactions if the human model and its predictions are inaccurate. In this work, we present an algorithmic approach for both arranging objects in a shared human-robot workspace and projecting virtual obstacles in augmented reality, optimizing for legibility in a given task. These workspace changes elicit more legible human behavior, improving robot predictions of human goals and thereby improving task fluency and safety. To evaluate our approach, we propose two user studies: a collaborative tabletop task with a manipulator robot and a warehouse navigation task with a mobile robot.

Thesis

2018

- Simulation and Analytical Models of Flexible, Robotic Automotive Assembly Line. Yi-Shiuan Tung, Michael Kelessoglou, Matthew Gombolay, and Julie Shah. Mar 2018

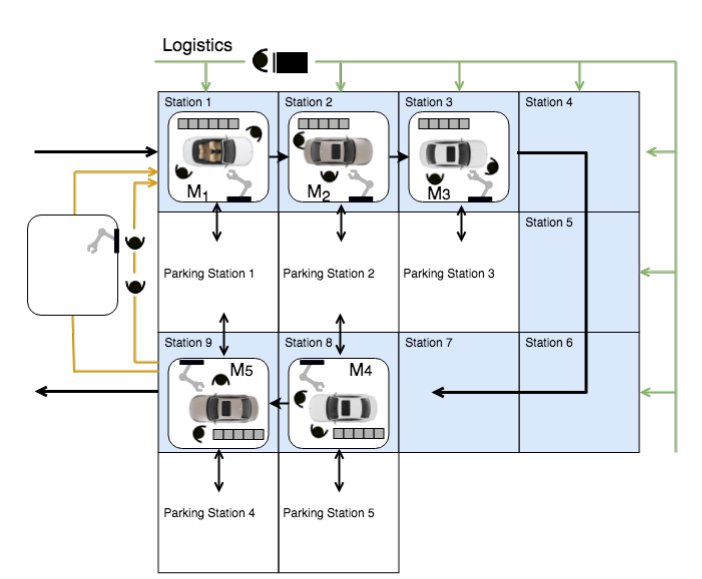

Seeking to adapt to a rapidly changing market, the automotive industry is interested in flexible assembly lines that can handle disruptions resulting from machine failures, scheduling changes, or stochastic task times. In this paper, we propose a layout for transporting cars that incorporates mobile robotic platforms capable of moving off the assembly line when disruptions occur. We use discrete event simulation to analyze the throughput of our flexible layout on a segment of an automotive assembly line. The results indicate average speed improvements of 26% and 36% over a conventional layout for a single band and for two bands with a finite buffer, respectively. In addition, we study the robustness of the flexible layout in the presence of additional inefficiencies inherent in adopting new technologies. Next, we present analytical models for throughput analyses of both layouts. We improve upon previous two-machine line analytical models by augmenting the state space to model every machine in a band, and report that the discrete models best approximate the throughput in most cases.

- Analytical and simulation models for flexible, robotic automotive assembly lines. Yi-Shiuan Tung. Mar 2018

To adapt to a rapidly changing market, the automotive industry is interested in flexible assembly lines that can handle disruptions due to machine failures or schedule changes. In this thesis, we propose a new assembly line layout (the flexible layout) that uses mobile robotic platforms to transport cars. When disruptions occur, these platforms can move out of the line so that they do not block other cars. We use discrete event simulation and analytical models to analyze the throughput of the new layout for a single band and for two bands with a finite buffer. The simulation results show that the new layout achieves average speedups of 25.6% and 35.9% over the conventional layout for the single-band and two-band cases, respectively. We improve previous two-machine line analytical models by augmenting the state of the Markov chain to model every machine in a band. We show that the augmented discrete Markov chain model predicts throughput within 13% and 18% of the simulated throughput for the conventional and flexible layouts, respectively. We further evaluate whether the flexible layout can improve its throughput by learning a policy for parking the platforms. Modeling the agents as independent learners, we apply single-agent reinforcement learning algorithms and show that the learned policy works well but suffers from a lack of coordination.
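The thesis builds on two-machine line Markov chain models. As a hedged sketch, the code below assumes a simplified discrete-time model, with conventions chosen for illustration that may differ from the thesis's augmented model:

- Each operation takes one time step.
- A working machine i fails with probability p_i; a down machine is repaired with probability r_i.
- An idle machine cannot fail.
- Machine 1 is blocked whenever the buffer of size N is full at the start of a step.
- Machine 2 is starved when the buffer is empty.

The chain enumerates states (n, α1, α2) and computes throughput from the stationary distribution by power iteration.

```python
from itertools import product
from typing import Dict, List, Tuple

State = Tuple[int, int, int]  # (buffer level n, machine 1 up, machine 2 up)


def _status(up: int, working: bool, p: float, r: float) -> List[Tuple[int, float]]:
    """Next up/down status of one machine with operation-dependent failures."""
    if not up:
        return [(1, r), (0, 1.0 - r)]   # a down machine is repaired w.p. r
    if working:
        return [(0, p), (1, 1.0 - p)]   # a working machine fails w.p. p
    return [(1, 1.0)]                   # an idle (blocked/starved) machine cannot fail


def stationary_distribution(N: int, p: Tuple[float, float], r: Tuple[float, float],
                            tol: float = 1e-13,
                            max_iters: int = 200_000) -> Dict[State, float]:
    """Power iteration on the (n, a1, a2) chain of a two-machine line."""
    states = list(product(range(N + 1), (0, 1), (0, 1)))
    pi = {s: 1.0 / len(states) for s in states}
    for _ in range(max_iters):
        nxt = dict.fromkeys(states, 0.0)
        for (n, a1, a2), mass in pi.items():
            w1 = a1 == 1 and n < N   # machine 1 works unless down or blocked
            w2 = a2 == 1 and n > 0   # machine 2 works unless down or starved
            n_next = n + int(w1) - int(w2)
            for (b1, q1), (b2, q2) in product(_status(a1, w1, p[0], r[0]),
                                              _status(a2, w2, p[1], r[1])):
                nxt[(n_next, b1, b2)] += mass * q1 * q2
        delta = max(abs(nxt[s] - pi[s]) for s in states)
        pi = nxt
        if delta < tol:
            break
    return pi


def throughput(N: int, p: Tuple[float, float], r: Tuple[float, float]) -> float:
    """Expected parts per time step leaving machine 2 in steady state."""
    pi = stationary_distribution(N, p, r)
    return sum(m for (n, _, a2), m in pi.items() if a2 == 1 and n > 0)
```

Under these assumptions, throughput cannot exceed either machine's isolated efficiency r_i / (r_i + p_i). In steady state, parts produced by machine 1 balance parts consumed by machine 2. The thesis's augmentation, modeling every machine in a band, would extend the state tuple beyond the (n, α1, α2) used here.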